Our DevOps team optimized the infrastructure for Veeqo, an inventory and shipping e-commerce platform, and made it highly efficient, smooth, and cost-effective for our partners.

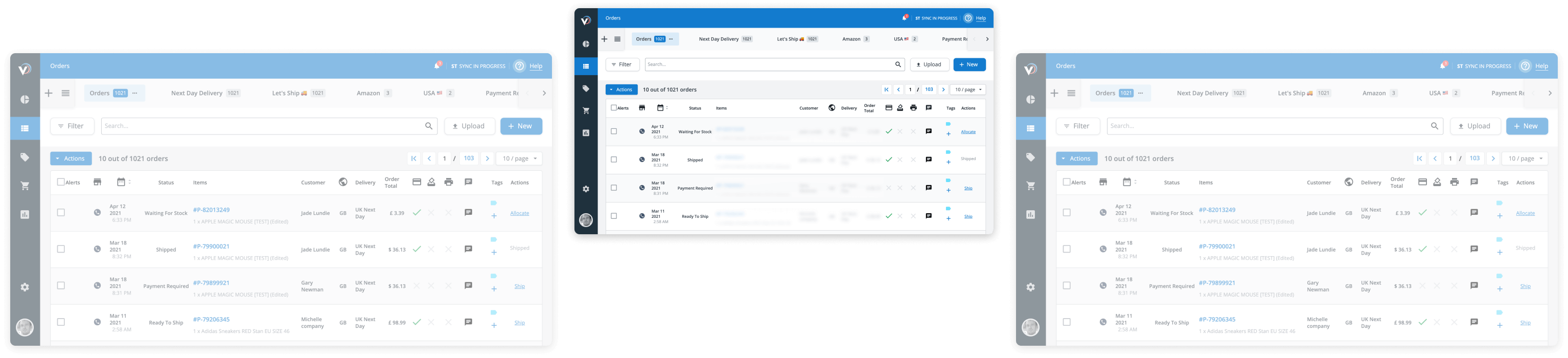

Veeqo is an inventory and shipping platform for e-commerce. It helps businesses to manage sales across multiple channels, ship items via multiple carriers, process refunds, manage B2B orders, forecast inventory—the list of features is almost endless. The platform directly integrates with the world’s most popular retail applications, including e-commerce platforms, marketplaces, shipping carriers, POS systems, and more.

Veeqo is a single platform that gives users complete control of their entire inventory. It enables businesses to quickly bulk ship orders from any sales channel, automate repetitive shipping tasks, and track every delivery in one place.

Hundreds of retailers all over the world use Veeqo to power their inventory and shipping.

- Founded in 2013 by an experienced e-commerce retailer
- Over 1.5bn inventory updates processed in Veeqo every year
- Over 31m items picked, packed, and shipped through Veeqo annually

The service integrates multiple subsystems into one platform.

In making Veeqo the reliable and efficient system it is today, our teams faced serious technical challenges. Addressing them required profound expertise, creative solutions, and intensive work. Particularly important were the contributions of our DevOps engineers.

The role of DevOps specialists is to bring order and predictability to the development process: remove bottlenecks, simplify delivery, and automate as many processes as possible. The goal is to turn the development of features into a smoothly running conveyor belt.

When we joined the Veeqo project, our DevOps team started sorting out existing blockages in the development process, prioritizing those that affected Veeqo’s users and the client’s business the most.

“We needed better monitoring and metrics collection to detect the true reason for the outage. We don’t sweep these things under the rug. We go for root causes.”

- Collecting host metrics (CloudWatch and okmeter showed consequences, not causes).
- Analyzing actual RAM usage and identifying 1,200-1,500 worker connections taking up about 40 GB of RAM.
- Adding PgBouncers to reduce the load on the database.
- Stabilizing connection pooling by placing the PgBouncers behind a load balancer (ELB), which distributes traffic more evenly and reduces the number of single points of failure (SPOFs).
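To put the connection figures above in perspective, here is a back-of-the-envelope estimate. It assumes the ~40 GB is spread evenly across the worker connections (real PostgreSQL per-backend memory varies with settings such as `work_mem` and with the queries being run), and the pool size of 100 is a hypothetical number, not Veeqo's actual PgBouncer configuration. PgBouncer's benefit comes from multiplexing many client connections onto a smaller set of server connections.

```python
GB_IN_MB = 1024


def mb_per_connection(total_gb: float, connections: int) -> float:
    """Average RAM per PostgreSQL backend connection, in MB (even-spread assumption)."""
    return total_gb * GB_IN_MB / connections


def pooled_ram_gb(per_conn_mb: float, pool_size: int) -> float:
    """RAM used if clients share a fixed pool of server connections,
    assuming the per-connection cost stays the same."""
    return per_conn_mb * pool_size / GB_IN_MB


if __name__ == "__main__":
    # Figures from the text: 1,200-1,500 connections using about 40 GB.
    for conns in (1200, 1500):
        print(f"{conns} connections -> ~{mb_per_connection(40, conns):.1f} MB each")
    POOL_SIZE = 100  # hypothetical pool size, for illustration only
    worst_case = mb_per_connection(40, 1200)
    print(f"pool of {POOL_SIZE} -> ~{pooled_ram_gb(worst_case, POOL_SIZE):.1f} GB")
```

Under these assumptions, each connection costs roughly 27-34 MB, so collapsing 1,200 backends into a pool of 100 would shrink that footprint to a few gigabytes.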

As a result, we spent $180 on launching PgBouncers on two c4.large instances behind an NLB and saved the customer about 10 times that amount in monthly costs by:

- freeing up about 40 GB of RAM;
- postponing the need to upgrade the RDS instance by about 6 months.

- ReadIOPS: halved for better instance performance
- Load: reduced due to extra RAM
- TPS: increased by 50%

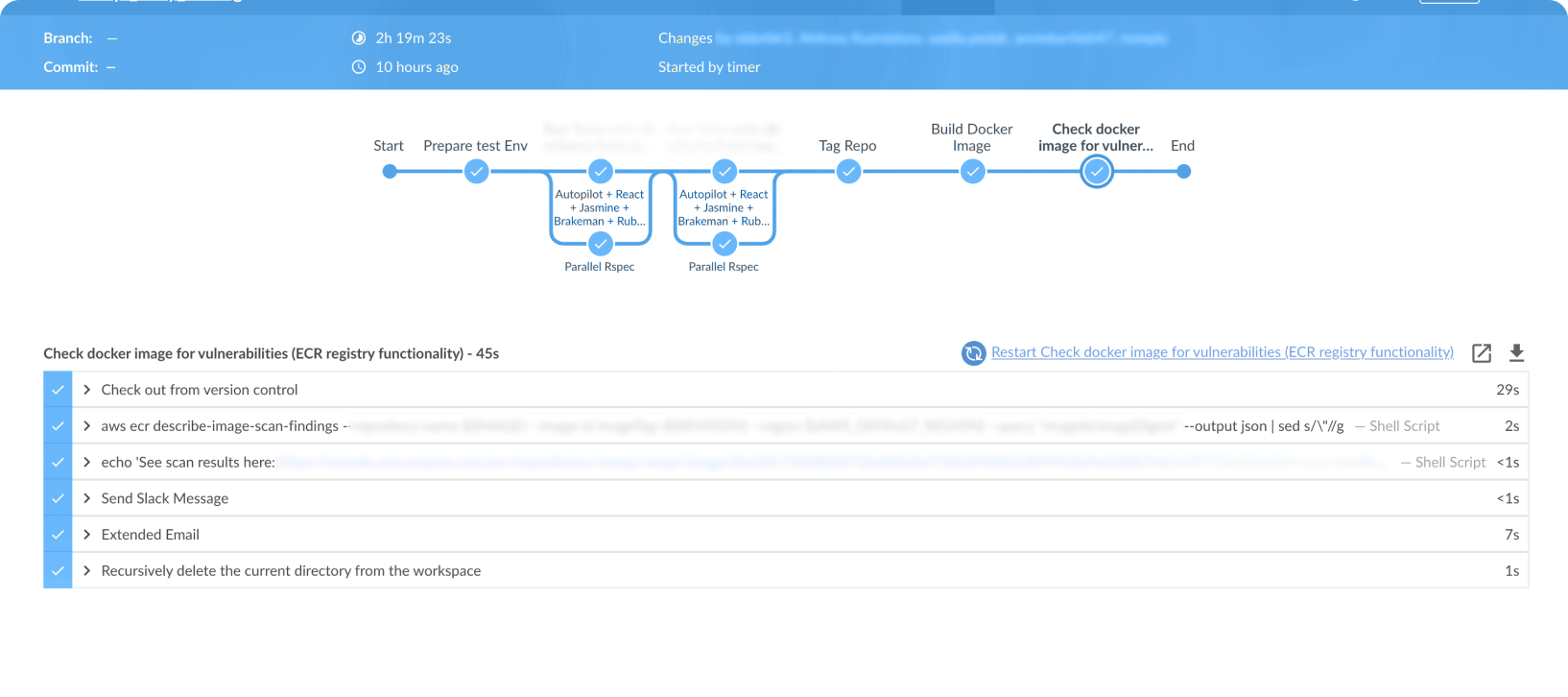

The developers didn’t trust their CI system. CI wasn’t helping them; it was hindering their work instead. It’s just bad DevOps.

- Reproducible CI results
- Unified runtime
- Standardized development, test, and production environments

Jenkins was operated manually on a separate EC2 instance, which made it hard to manage, especially in emergencies. We moved the Jenkins master to ECS and rebuilt its provisioning, deployment, and update process (later, we’ll move it to k8s, but in 2017, ECS was the only good option for us). We reduced build time and cost by running a small share of the Jenkins agents’ compute on reserved instances and moving 90% of the load to spot instances.
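The reserved/spot split can be expressed as a simple blended-cost calculation. This sketch assumes cost scales linearly with instance-hours; the hourly prices in the example are hypothetical placeholders, not actual AWS rates, which vary by region and instance type and, for spot, over time.

```python
def blended_hourly_cost(total_instances: int, spot_share: float,
                        reserved_price: float, spot_price: float) -> float:
    """Hourly cost of an agent fleet split between reserved and spot instances."""
    if not 0.0 <= spot_share <= 1.0:
        raise ValueError("spot_share must be between 0 and 1")
    spot = total_instances * spot_share
    reserved = total_instances - spot
    return reserved * reserved_price + spot * spot_price


if __name__ == "__main__":
    # Hypothetical prices per instance-hour, for illustration only.
    RESERVED, SPOT = 0.10, 0.03
    all_reserved = blended_hourly_cost(10, 0.0, RESERVED, SPOT)
    mostly_spot = blended_hourly_cost(10, 0.9, RESERVED, SPOT)
    print(f"all reserved: ${all_reserved:.2f}/h, 90% spot: ${mostly_spot:.2f}/h")
```

Keeping a small reserved core means some agents survive spot interruptions, while the bulk of build capacity runs at the lower spot price.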

We further renovated CI by:

Everything down to the last comma is now written in the form of code. Even if we took Jenkins down completely and had to build it from scratch again, it’d take us no more than 10 minutes.

We call it a win-win situation between the development team and the customer’s business.

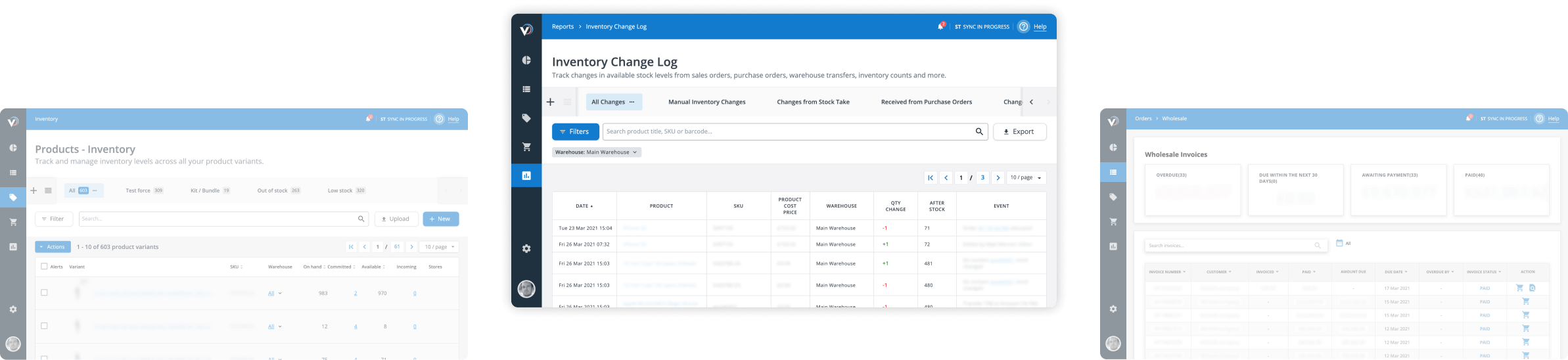

Elasticsearch is crucial to how users experience the Veeqo platform: the dashboard and the entire interface rely on it. Even if everything else functions flawlessly, delays in the search engine alone degrade the user experience.

There are two main ways to improve performance:

- increase compute resources;
- optimize the use of resources.

At any given moment, DevOps specialists weigh the costs of different solutions. We applied both approaches: we started by enhancing the cluster and later optimized indexing to make search as convenient for users as possible.

We implemented a new Elasticsearch cluster as a self-hosted solution on EC2 instances.

Three reasons:

The indices featured too much unnecessary information and non-optimized mapping.
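The text does not list the exact mapping changes, so the following is only a generic illustration of what a leaner mapping can look like; the field names are hypothetical, not Veeqo's schema. Built as a Python dict, it is the JSON body you would send when creating an index: `dynamic: strict` rejects documents with unmapped fields, `keyword` skips full-text analysis for identifiers, and `enabled: false` keeps a bulky object in `_source` without indexing it.

```python
import json

# Hypothetical index mapping; field names are illustrative only.
mapping = {
    "mappings": {
        "dynamic": "strict",  # reject unmapped fields instead of guessing their types
        "properties": {
            "sku": {"type": "keyword"},        # exact-match lookups, no analysis
            "title": {"type": "text"},         # full-text search
            "quantity": {"type": "integer"},
            "updated_at": {"type": "date"},
            # Kept in _source for display, but not parsed or indexed.
            "raw_payload": {"type": "object", "enabled": False},
        },
    }
}

if __name__ == "__main__":
    print(json.dumps(mapping, indent=2))
```

Indexing only the fields users actually search on keeps indices smaller, which generally means less memory and disk per node and faster queries.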

What we did:

Search time went down to under 5 seconds (now: ~250-300 ms)

We received massive positive feedback as Veeqo users were contacting customer

Elasticsearch became more efficient and reliable without costing the customer more

Now, Elasticsearch is back to three nodes; in fact, two are enough for normal operation, and the third is there to ensure fail-safety.
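Why three nodes rather than two? Assuming all three are master-eligible (an assumption; the text does not say), a majority-based master election needs `n // 2 + 1` votes. This is a simplified model, since Elasticsearch manages its voting configuration itself, but it shows why a third node adds fail-safety.

```python
def quorum(master_eligible: int) -> int:
    """Votes needed for a strict majority of master-eligible nodes."""
    return master_eligible // 2 + 1


def tolerable_failures(master_eligible: int) -> int:
    """Nodes that can fail while the survivors still form a majority."""
    return master_eligible - quorum(master_eligible)


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(f"{n} node(s): quorum {quorum(n)}, survives {tolerable_failures(n)} failure(s)")
```

In this model, two nodes need both votes and cannot lose either one, while three nodes keep a majority after losing any single node.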

Cutting costs for the customer is not about making compromises; it is about achieving efficiency. The mind of a DevOps engineer is like an hourglass: it focuses either on improving performance or on cutting costs, and it keeps flipping between the two. The goal is to find the balance: solutions that are both effective and cheap.

Once we improved Veeqo’s performance and user experience, we started optimizing infrastructure costs. In total, the infrastructure used to cost the customer about $20,000 per month. Within one summer, as we prepared the new solutions and migrated to them, we brought the figure down to about $13,000.
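The cost figures above translate into the savings below. This is plain arithmetic on the approximate numbers from the text; the annual figure assumes the monthly saving stays constant.

```python
def savings(before: float, after: float) -> tuple[float, float, float]:
    """Monthly saving, relative reduction, and annualized saving."""
    monthly = before - after
    return monthly, monthly / before, monthly * 12


if __name__ == "__main__":
    # Approximate monthly infrastructure costs in USD, as quoted in the text.
    monthly, share, yearly = savings(20_000, 13_000)
    print(f"${monthly:,.0f}/month ({share:.0%}), about ${yearly:,.0f}/year")
```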

Importantly, we made the system more secure by running RabbitMQ, Memcached, Elasticsearch, and other services inside our own network. The monitoring system, which we kept refining as we cut costs, confirmed that the cost optimization was effective.

Costs can’t be cut overnight, at least not without damaging user experience, service uptime, and system survivability. We initially told Veeqo how we would decrease monthly infrastructure costs by about $7,000, but it took months to make the transitions smooth. Our Veeqo partners trusted our professionalism, and it all paid off. We’re proud of how we optimized costs while improving the system’s performance.

Kubernetes and the team were ready for each other. Kubernetes was growing stable, well-integrated with all the major AWS services, and well-equipped for high production loads. The team, in turn, had stabilized the development processes and needed better automation. Kubernetes provided a unified platform for launching apps and orchestrating Docker containers, which we wanted in order to safeguard Veeqo’s future growth. Having one platform for everything meant independence from any single cloud provider and the ability to launch instances in any cloud. We were becoming more cloud-agnostic: Kubernetes lets us move workloads seamlessly.

There are four main types of resources: CPU, RAM, disk, and network. How a service uses them depends on its type. Our objective was to arrange the services so that their resource usage doesn’t overlap and, importantly, so that few resources sit idle.

There’s nothing more expensive than idle resources. At the same time, you don’t want to load the nodes to their maximum capacity because (a) it degrades performance and (b) you need some spare capacity to handle load spikes. Kubernetes helps strike that balance.

The balance comes from continuous calculation. The Kubernetes scheduler assigns each service to a node based on the resources it requests and the capacity still free on each node, so that no node is overused, and it repeats this every time a pod is created or recreated. The result is a distribution that a human admin could hardly achieve manually.
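Placing services on nodes is a bin-packing problem. The real Kubernetes scheduler works differently (it filters nodes that can fit a pod's resource requests, then scores the candidates), so the sketch below is only a simplified illustration using first-fit decreasing, with a headroom fraction reserved for load spikes. The service names, sizes, and the 20% headroom are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu: float  # cores
    ram: float  # GiB
    used_cpu: float = 0.0
    used_ram: float = 0.0
    services: list = field(default_factory=list)

    def fits(self, cpu: float, ram: float, headroom: float) -> bool:
        # Leave a fraction of every node free for load spikes.
        return (self.used_cpu + cpu <= self.cpu * (1 - headroom)
                and self.used_ram + ram <= self.ram * (1 - headroom))


def place(services, nodes, headroom=0.2):
    """First-fit decreasing: place the largest services first, each on the
    first node that still fits it. Returns the names that could not be placed."""
    unplaced = []
    for name, cpu, ram in sorted(services, key=lambda s: (s[1], s[2]), reverse=True):
        node = next((n for n in nodes if n.fits(cpu, ram, headroom)), None)
        if node is None:
            unplaced.append(name)
            continue
        node.services.append(name)
        node.used_cpu += cpu
        node.used_ram += ram
    return unplaced


if __name__ == "__main__":
    # Hypothetical services: (name, CPU cores, RAM GiB)
    services = [("web", 2, 4), ("worker", 1.5, 8), ("search", 1, 6), ("cron", 0.5, 1)]
    nodes = [Node("node-a", 4, 16), Node("node-b", 4, 16)]
    print("unplaced:", place(services, nodes))
    for n in nodes:
        print(n.name, n.services, n.used_cpu, n.used_ram)
```

Here the CPU-heavy `web` and RAM-heavy `search` end up sharing a node, which is the kind of non-overlapping resource use the text describes.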

Kubernetes specializes in resource management and orchestration, but it needs a containerization service alongside it. We used Docker to standardize the runtime for our applications. The two worked perfectly together.

Kubernetes provides an environment where Ops and Devs can speak the same language: the language of YAML configurations and Kubernetes objects. We get system resources on one end, containers on the other, and in the middle, Kubernetes works its magic.
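As a small example of that shared language, here is a minimal Deployment object built in Python. The name, image, replica count, and resource values are hypothetical. Printed as JSON, it can be applied with `kubectl apply -f -`, since kubectl accepts JSON as well as YAML; the `requests` are what the scheduler uses when choosing a node.

```python
import json


def deployment(name: str, image: str, replicas: int,
               cpu_request: str, memory_request: str) -> dict:
    """Minimal apps/v1 Deployment with resource requests and a memory limit."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "resources": {
                            # The scheduler places the pod based on requests.
                            "requests": {"cpu": cpu_request, "memory": memory_request},
                            "limits": {"memory": memory_request},
                        },
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical service and image, for illustration only.
    print(json.dumps(deployment("web", "example.com/web:1.0", 2, "500m", "1Gi"), indent=2))
```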

Infrastructure as code (IaC) is a way to manage large infrastructures where manual operation is highly inefficient or simply impossible. To go fully IaC, our teams made fundamental changes in the ways we develop, deliver, and maintain our solutions.

We are proud of our work with Veeqo and eager to share our successes. As our partnership continues, we’re constantly looking for new ways to meet Veeqo’s business needs and make its users’ experience better.

- PostgreSQL: 40 GB of RAM freed up, ReadIOPS halved, and TPS increased by 50%.
- Infrastructure costs: reduced without losses in performance and security.
- Elasticsearch: more secure data access; search time decreased initially to under 5 seconds and by now to ~250-300 ms.

Ruby

NodeJS

Elasticsearch

PostgreSQL

RabbitMQ

Redis

Memcached

CloudWatch

Prometheus

Grafana

Sentry

Heroku

AWS

Kubernetes

Terraform

Travis CI

Jenkins

Docker

Helm

Maxim Glotov, DevOps engineer

Andrew Sapozhnikov, CIO

The resource provided by Mad Devs is excellent, bringing not only their own skill and expertise, but the input of the wider team to the project too. They manage the remote work seamlessly and fit well into the company’s workflow.